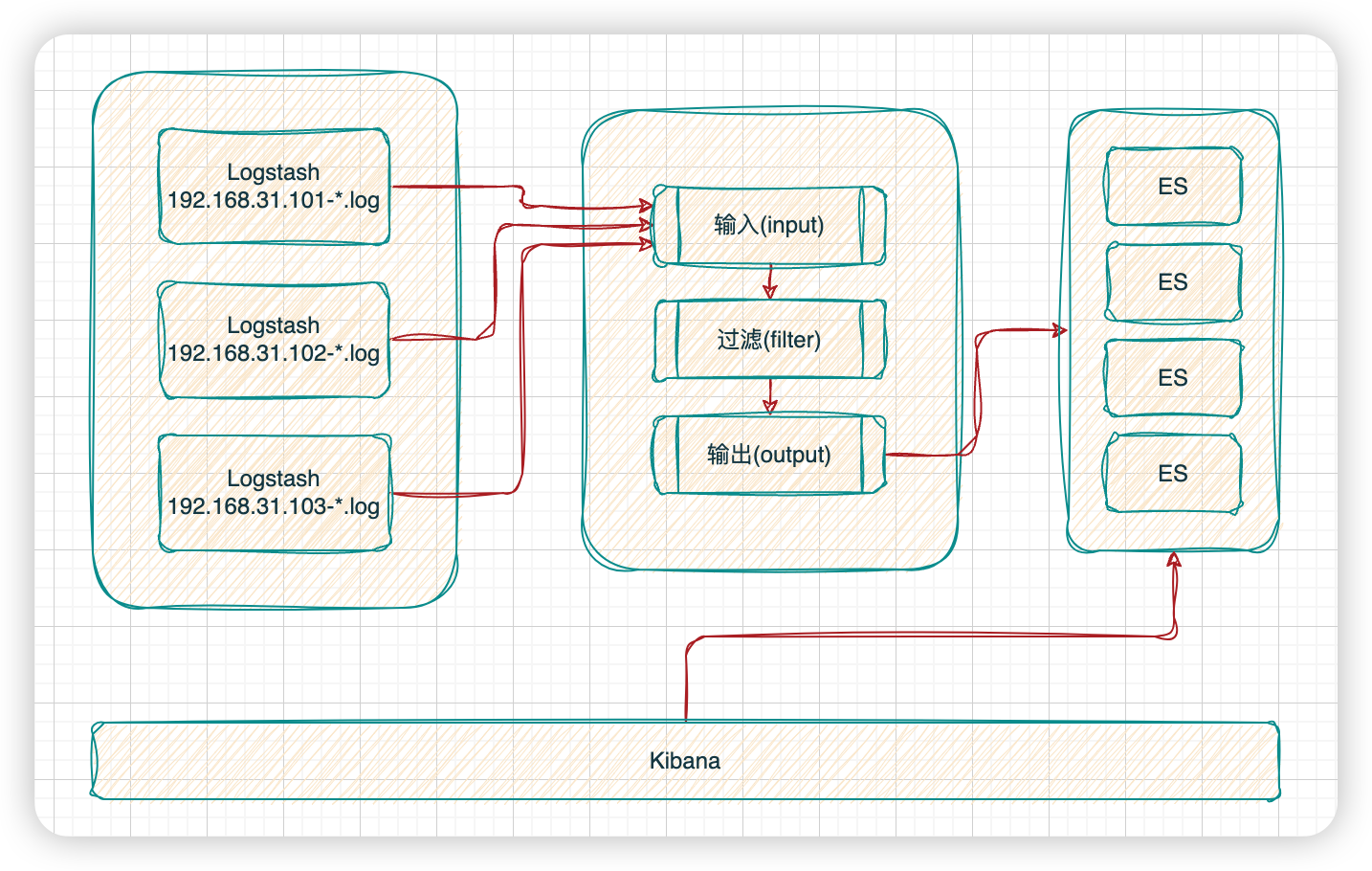

How ELK (Elasticsearch + Logstash + Kibana) collects logs:

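Option 1:

1. Install Logstash on every server.

2. Configure Logstash to read a specific log file and send its contents to ES.

3. Developers use Kibana, connected to ES, to query the stored logs.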
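To make the Option 1 data flow concrete, here is a minimal Python sketch of what Logstash does with a fixed log file. It uses only the standard library. It reads the complete lines appended since a remembered byte offset (Logstash's file input keeps a comparable position in its sincedb). It then wraps each line in the newline-delimited JSON body accepted by Elasticsearch's `_bulk` API. The function names, the index name and the `host` field are assumptions for illustration. In the real setup, Logstash's `file` input and `elasticsearch` output do this work.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Iterable, List, Tuple


def read_new_lines(path: str, offset: int) -> Tuple[List[str], int]:
    """Return the complete lines written after `offset` and the new byte offset.

    A trailing line without a newline is left for the next call, because the
    writer may still be in the middle of it.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n") + 1  # 0 when no complete line is available yet
    lines = data[:end].decode("utf-8", errors="replace").splitlines()
    return lines, offset + end


def build_bulk_body(lines: Iterable[str], index: str, host: str) -> str:
    """Wrap log lines as a _bulk NDJSON body: one action line plus one document line each."""
    rows = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        rows.append(json.dumps({"index": {"_index": index}}))
        rows.append(json.dumps({
            "@timestamp": datetime.now(timezone.utc).isoformat(),
            "host": host,  # hypothetical field identifying the source server
            "message": line,
        }))
    # The _bulk API requires the body to end with a newline.
    return "\n".join(rows) + "\n" if rows else ""


def ship(es_url: str, body: str) -> dict:
    """POST a _bulk body to Elasticsearch and return the parsed JSON response."""
    req = urllib.request.Request(
        f"{es_url}/_bulk",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

Consider `lines, offset = read_new_lines("/var/log/app.log", 0)` followed by `ship("http://192.168.31.97:9200", build_bulk_body(lines, "app-logs", "web01"))`. Together they would index the lines currently in that (hypothetical) file into an `app-logs` index. Keeping the offset between calls is what lets a shipper resume without re-sending old lines.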

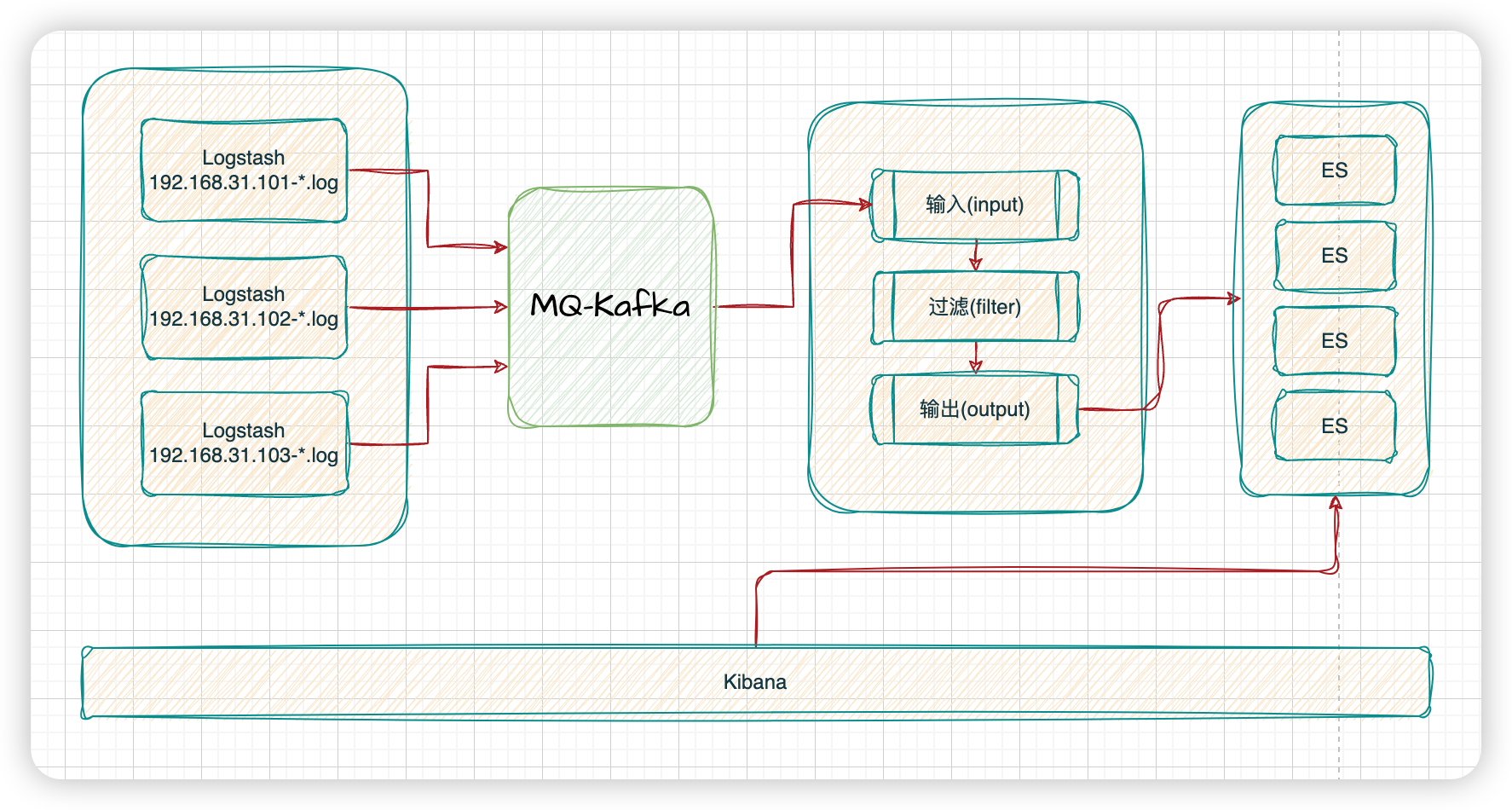

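Option 2:

ELK + Kafka

Logstash does not read log files directly. Instead, the application services write their logs to a message queue (Kafka), and Logstash consumes them from Kafka.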
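In Option 2 the application publishes its own log events, so what changes is the logging setup rather than the log files. The handler below is a stdlib-only Python sketch. It serializes each `logging` record to JSON and passes the bytes to a `send` callable. `send` stands in for a Kafka producer. Like the `JsonShippingHandler` name and the event field names, it is an assumption of this example.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Callable


class JsonShippingHandler(logging.Handler):
    """Serialize each log record to JSON and hand the bytes to `send`.

    `send` is a hypothetical placeholder for a message-queue client, e.g. a
    function that publishes to a Kafka topic; it is not part of the original setup.
    """

    def __init__(self, send: Callable[[bytes], None], service: str) -> None:
        super().__init__()
        self.send = send
        self.service = service

    def emit(self, record: logging.LogRecord) -> None:
        try:
            event = {
                "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
                "service": self.service,
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),  # applies %-style arguments
            }
            self.send(json.dumps(event).encode("utf-8"))
        except Exception:
            # Report via logging's standard error hook instead of crashing the app.
            self.handleError(record)
```

With the kafka-python client, for instance, `send` could be `lambda b: producer.send("app-logs", b)` for a `KafkaProducer` named `producer` (the topic name is hypothetical). Logstash would then read that topic through its `kafka` input plugin. Injecting `send` keeps the handler testable without a broker.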

Both options need ES and Kibana, so Option 1 is set up first. Everything below is installed with Docker. ES and Kibana should be the same version.

Elasticsearch

https://hub.docker.com/_/elasticsearch/tags?page=1&name=7.14.0

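```shell
# Requires an existing Docker network named "macvlan" (created with docker network create -d macvlan ...);
# 192.168.31.97 must be a free address in that network's subnet.
# discovery.type=single-node runs ES as a standalone one-node cluster; ES_JAVA_OPTS fixes the JVM heap at 1 GB.
docker run -d --name elasticsearch \
  --net macvlan --ip 192.168.31.97 \
  -v /home/myfiles/docker/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /home/myfiles/docker/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /home/myfiles/docker/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms1024m -Xmx1024m" \
  docker.io/jasonleemz/elasticsearch:230730
```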

elasticsearch.yml

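```yaml
# Bind the HTTP API to all interfaces so Kibana and Logstash can reach it.
http.host: 0.0.0.0
# Disable X-Pack security (no login, no TLS); only acceptable on a trusted network.
xpack.security.enabled: false
```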
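Elasticsearch usually needs a little while after `docker run` before it answers requests. Below is a minimal Python sketch that polls the `_cluster/health` endpoint at the address given with `--ip` until the cluster is usable. The helper names and timeout values are arbitrary choices. Counting `yellow` as usable is an assumption suited to this single-node setup, where replica shards can never be assigned.

```python
import http.client
import json
import time
import urllib.request

ES_URL = "http://192.168.31.97:9200"  # address assigned with --ip in the docker run command


def is_usable(health: dict) -> bool:
    """True for green or yellow cluster status.

    On a single node, indices that request replicas stay yellow because a
    replica is never placed on the same node as its primary; red means some
    primary shards are unassigned.
    """
    return health.get("status") in ("green", "yellow")


def wait_for_es(url: str = ES_URL, timeout_s: float = 60.0, interval_s: float = 2.0) -> dict:
    """Poll GET {url}/_cluster/health until usable; raise TimeoutError otherwise."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(f"{url}/_cluster/health", timeout=5) as resp:
                health = json.load(resp)
            if is_usable(health):
                return health
        except (OSError, ValueError, http.client.HTTPException):
            pass  # not reachable yet, or the reply was not valid JSON
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Elasticsearch at {url} not ready within {timeout_s}s")
        time.sleep(interval_s)
```

`wait_for_es()` returns the health document. A startup script could call it before starting Kibana or Logstash.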

Kibana

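```shell
# Kibana joins the same "macvlan" network; with no --ip, Docker assigns an address from that network's pool.
# Kibana reads /usr/share/kibana/config/kibana.yml, so the file must be mounted under exactly that name.
docker run -itd --name kibana \
  --net macvlan \
  -e ELASTICSEARCH_HOSTS="http://192.168.31.97:9200" \
  -v /home/myfiles/docker/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml \
  docker.io/jasonleemz/kinaba:230731
```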

kibana.yml

```yaml
#
# ** THIS IS AN AUTO-GENERATED FILE **
#

# Default Kibana configuration for docker target
server.host: "0"
server.shutdownTimeout: "5s"
# The ELASTICSEARCH_HOSTS variable passed to docker run takes precedence over this value.
elasticsearch.hosts: [ "http://elasticsearch:9200" ]
monitoring.ui.container.elasticsearch.enabled: true
```

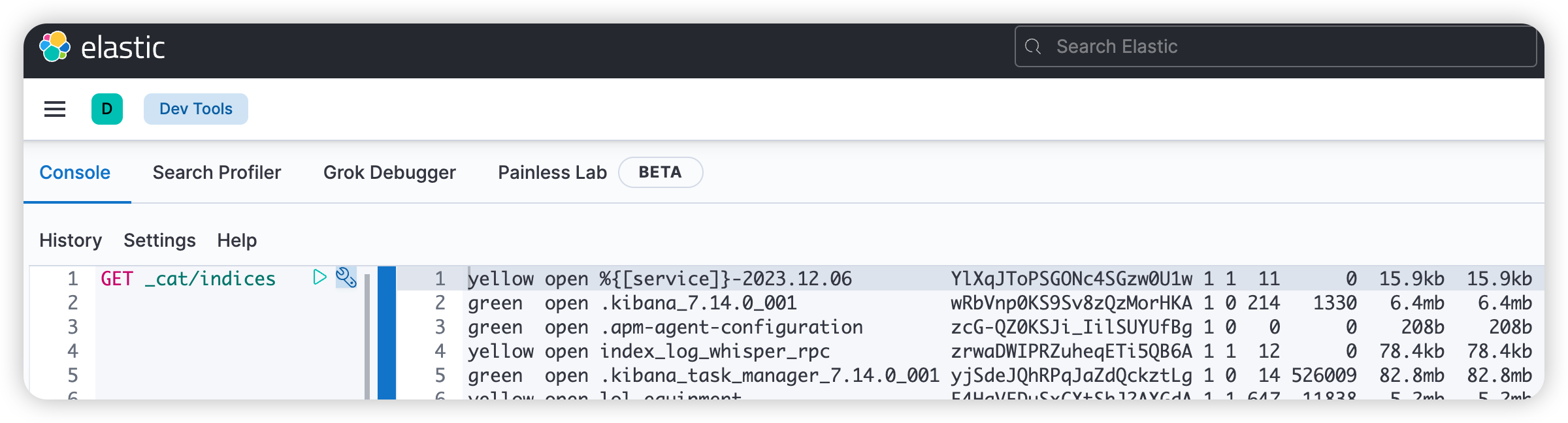

Open the Kibana admin UI

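Kibana listens on port 5601: http://kibana:5601/. Here `kibana` stands for the Kibana container's address: either a hostname that resolves to it, or the IP Docker assigned on the macvlan network (shown by `docker inspect kibana`).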
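Kibana's Discover page searches ES on the developer's behalf. For scripted checks, a roughly equivalent request can go straight to the `_search` API, as in this Python sketch. It assumes the documents carry Logstash's default `message` and `@timestamp` fields. The helper names, the `logstash-*` index pattern and the 15-minute window are illustrative.

```python
import json
import urllib.request

ES_URL = "http://192.168.31.97:9200"  # the Elasticsearch address used in this setup


def build_log_query(keyword: str, minutes: int = 15, size: int = 50) -> dict:
    """Query DSL: documents whose `message` matches `keyword` in the last `minutes`, newest first."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {
            "bool": {
                "must": [{"match": {"message": keyword}}],
                # Filter context: narrows results without affecting relevance scores.
                "filter": [{"range": {"@timestamp": {"gte": f"now-{minutes}m"}}}],
            }
        },
    }


def search_logs(index: str, query: dict, es_url: str = ES_URL) -> list:
    """POST the query to {es_url}/{index}/_search and return the matching messages."""
    req = urllib.request.Request(
        f"{es_url}/{index}/_search",
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        result = json.load(resp)
    return [hit["_source"].get("message") for hit in result["hits"]["hits"]]
```

For example, `search_logs("logstash-*", build_log_query("timeout"))` returns up to 50 recent messages matching `timeout`. The time range sits in a `filter` clause because it only narrows the results and needs no relevance scoring.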
